CAPSTONE DESIGN SHOWCASE 2022

Team members

Jeremy Surjoputro (EPD), Maddula Akshaya (EPD), Akshaya Rajesh (EPD), Wong Kai-En Matthew Ryan (ISTD), Ang Sok Teng Cassie (ISTD)

(If the video on the right does not load, click here to watch the short video we made for our product!)

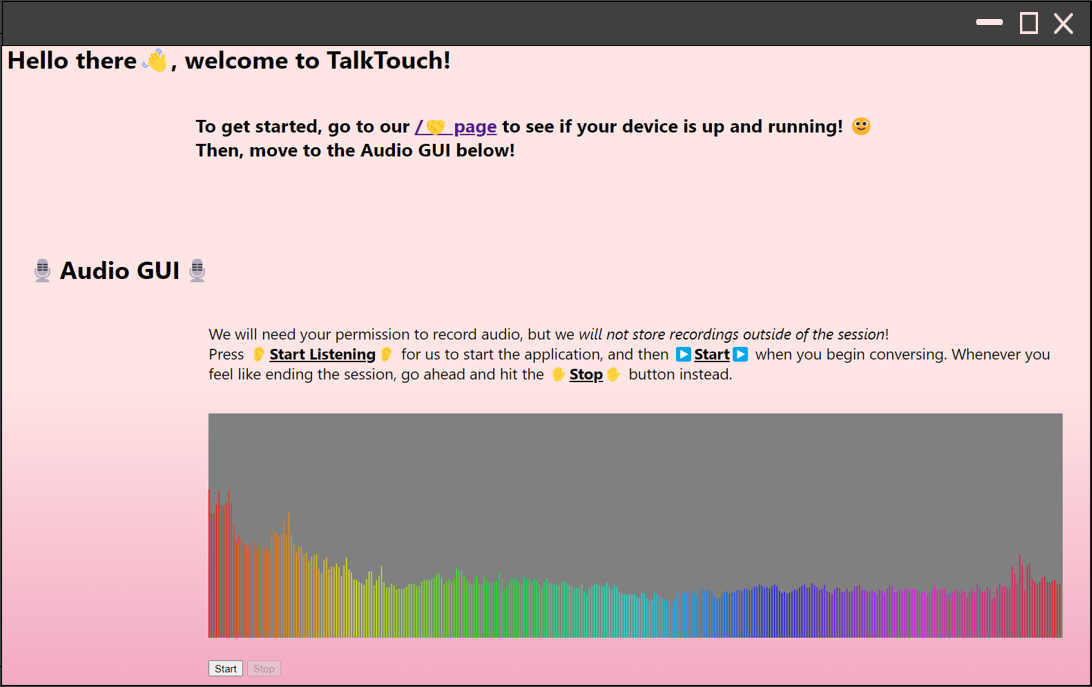

TalkTouch aims to enhance digital communication by providing real-time haptic feedback, helping to bridge the physical distance between loved ones, friends, and family. By combining a web application with a lightweight haptic backpack that it communicates with, TalkTouch lets wearers experience the touch of a loved one even when they are oceans apart.

Our industry partner, Feelers, is an art and technology studio collective under its parent company, Potato Productions.

Feelers initiates and nurtures cross-pollination between art and technology through products that aim to increase accessibility, deepen engagement, and foreground the value of the art-making process. With a core interest in creating a sustainable, inhabitable, and lively world for all, Feelers aims to collaborate on processes and solutions that endure.

Experiencing the touch of a loved one has a distinct positive impact on both our emotional and physical wellbeing. Conversely, a lack of touch, or touch deprivation, is detrimental to health, and it has become increasingly common due to the COVID-19 pandemic. Although we can still communicate with loved ones virtually through video calling and chat applications, these cannot replicate the feeling of being "right next to" someone we love.

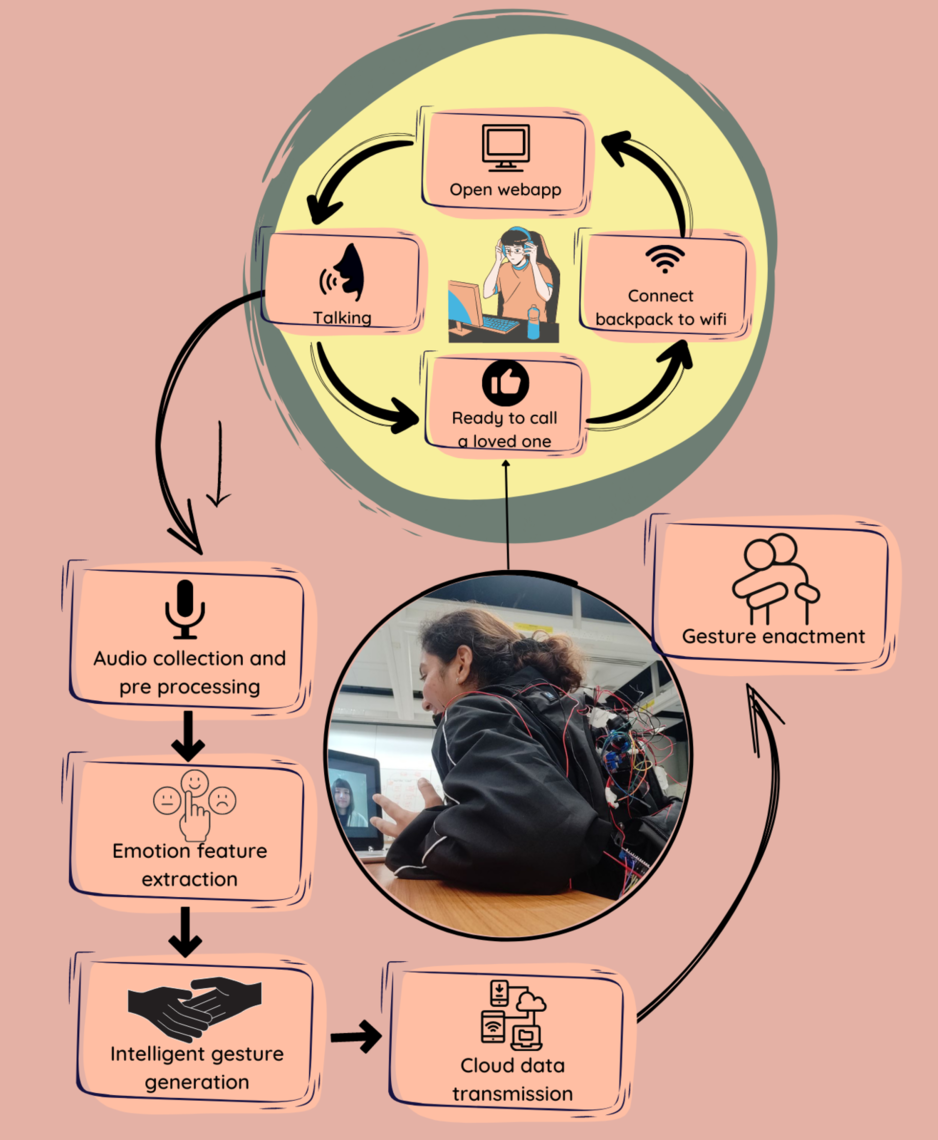

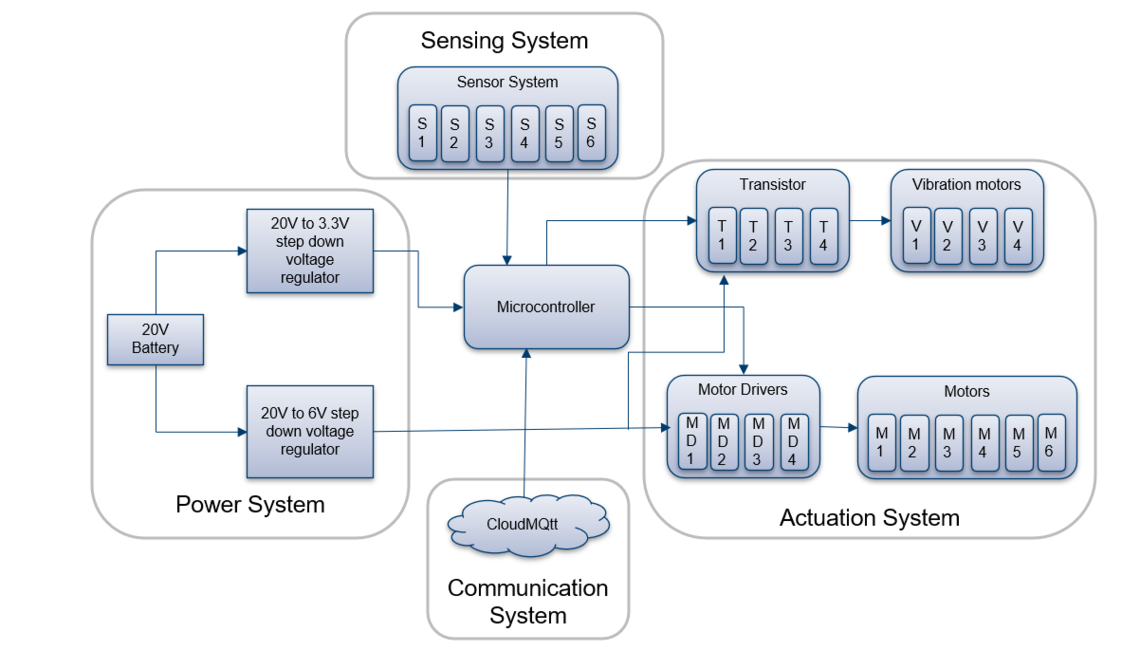

As part of TalkTouch, we have designed a sleek backpack, the TouchPack. By listening to features of speech, TalkTouch infers the emotional context of a conversation and generates a corresponding human-like gesture. With motors placed next to specific touch points on the body, the backpack applies pressure and vibrations that closely resemble human touch.

A controller in the backpack communicates with a web application that runs a fine-tuned machine learning model. The model determines, in real time, the feedback the user should receive, and it also helps the suit learn, from face-to-face conversations, when and why friends and family show affection.
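The page does not say how the web application and the backpack controller exchange messages. As a minimal sketch, assuming a newline-delimited JSON message over a serial-style link, one haptic command could be framed as below. The helper names `encode_command`/`decode_command`, the `levels`/`ms` fields and the 8-bit scaling are all hypothetical.

```python
import json


def encode_command(motor_levels, duration_ms):
    """Hypothetical wire format: one newline-terminated JSON object per command.

    motor_levels: intensities in [0, 1], one per motor. They are scaled to
    0-255 (e.g. for an 8-bit PWM output) and clamped to that range.
    """
    levels = [max(0, min(255, round(level * 255))) for level in motor_levels]
    message = {"levels": levels, "ms": duration_ms}
    return (json.dumps(message) + "\n").encode("utf-8")


def decode_command(line):
    """Parse one line produced by encode_command back into (levels, duration)."""
    message = json.loads(line.decode("utf-8"))
    return message["levels"], message["ms"]


if __name__ == "__main__":
    frame = encode_command([0.0, 1.0, 1.2], duration_ms=800)
    print(frame)
    print(decode_command(frame))
```

Newline framing keeps the controller-side parser simple: it can read bytes until `\n` and decode one complete command at a time.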

To be as non-intrusive as possible, the algorithm relies not on the content of speech but on its subtext, such as tone and vocal inflection. To preserve data privacy, no speech transcription or word recognition is ever performed.
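To illustrate what "subtext" features can look like, here is a minimal sketch that computes loudness (RMS energy), zero-crossing rate and a rough autocorrelation pitch estimate directly from raw audio samples, with no transcription involved. These particular features, the 50-400 Hz pitch range and the helper names are illustrative assumptions, not necessarily what TalkTouch uses.

```python
import math


def rms_energy(samples):
    """Root-mean-square loudness of a frame of audio samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def zero_crossing_rate(samples):
    """Fraction of adjacent sample pairs whose sign changes."""
    crossings = sum(1 for a, b in zip(samples, samples[1:]) if (a >= 0) != (b >= 0))
    return crossings / (len(samples) - 1)


def estimate_pitch_hz(samples, sample_rate, fmin=50.0, fmax=400.0):
    """Crude pitch estimate: the lag (within the fmin..fmax range) at which the
    frame is most similar to a shifted copy of itself is taken as one period.
    Uses an unnormalised autocorrelation, so it is only a rough sketch."""
    min_lag = int(sample_rate / fmax)
    max_lag = min(int(sample_rate / fmin), len(samples) - 1)
    if max_lag < min_lag:
        raise ValueError("frame too short for the requested pitch range")
    best_lag, best_score = min_lag, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        score = sum(samples[i] * samples[i + lag] for i in range(len(samples) - lag))
        if score > best_score:
            best_lag, best_score = lag, score
    return sample_rate / best_lag


if __name__ == "__main__":
    sr = 8000
    tone = [0.5 * math.sin(2 * math.pi * 200 * n / sr) for n in range(800)]
    print(estimate_pitch_hz(tone, sr), rms_energy(tone))
```

A rising pitch or a jump in energy can then be fed to a model as numbers, while the words themselves are never recovered.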

The team envisioned the TouchPack as something anyone can use, anytime and anywhere. With that in mind, it was designed to be:

i. Lightweight

Weighing in at just under 2 kilograms, the TouchPack is no heavier than your average backpack.

ii. Adjustable

With adjustable straps along the shoulder pads and chest, the TouchPack has a one-size-fits-all design that anyone can wear.

iii. Human-like

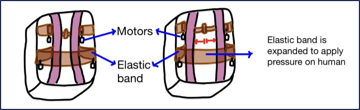

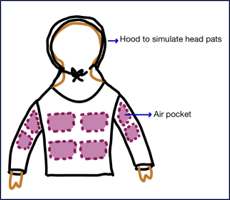

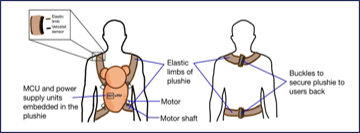

Human touch is replicated using two mechanisms. First, our smart contractible belts tighten and loosen in response to speech, applying pressure to the torso that feels just like a comforting gesture. Second, vibration motors imitate gentler forms of human contact to enhance the feeling of comfort.

iv. Portable

Compact (insert dimensions) and equipped with all the features of a regular backpack, including a storage compartment, the TouchPack can be taken anywhere. With a battery life of up to four hours on a full charge, there is no need to sit next to a power outlet while using it.

v. Safe

The TouchPack contains a shock-proof circuit that can be turned on and off with the flick of a switch, and all wires are double-insulated to prevent contact with human skin.

vi. Ergonomic

The TouchPack has cushioned straps and a cushioned back panel to reduce fatigue during use.

To provide real-time haptic responses to conversational speech, our web application was designed with the following requirements:

i. Rapid communication

ii. Resource efficient

Built on the Flask web framework, the web application runs smoothly and without noticeable lag in any browser on a modern laptop or PC.

iii. Non-intrusive

No additional installations or downloads are required in the user's browser, making for a hassle-free experience.

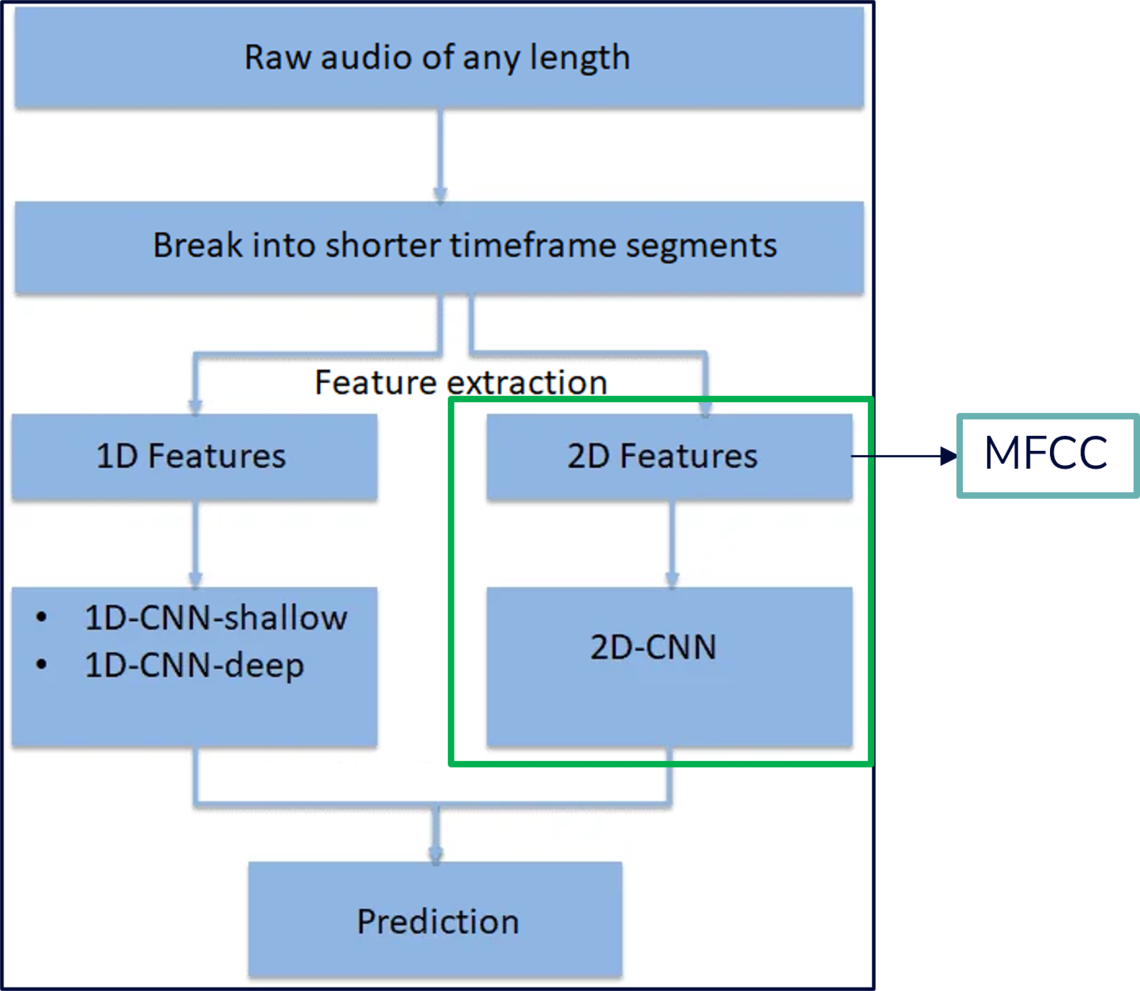

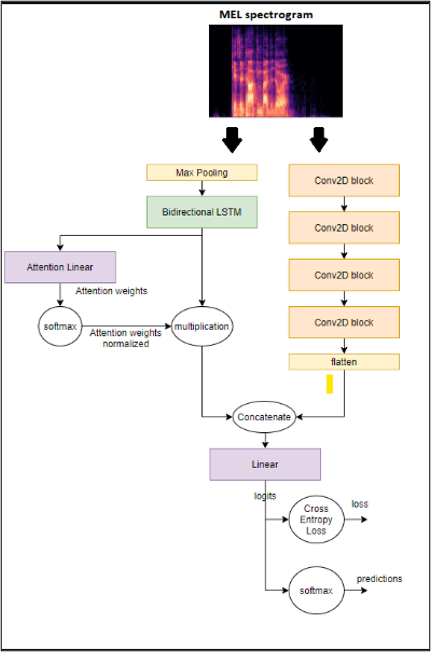

Our custom model, trained on real conversational data, is the cornerstone of the project, offering:

i. Privacy

The model only looks at the subtext of speech; no transcript of what the user says is ever made. In addition, recordings are deleted on a frequent basis.
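The page does not state how often recordings are deleted. A minimal sketch of a time-based retention purge, assuming recordings are stored as files in a single directory; the function name and the 10-minute window used in the example are hypothetical.

```python
import os
import tempfile
import time


def purge_old_recordings(directory, max_age_seconds, now=None):
    """Delete regular files in `directory` last modified more than
    `max_age_seconds` ago; return the deleted file names, sorted."""
    now = time.time() if now is None else now
    with os.scandir(directory) as entries:
        stale = [e for e in entries
                 if e.is_file() and now - e.stat().st_mtime > max_age_seconds]
    # Delete after the directory scan has finished, not while iterating it.
    for entry in stale:
        os.remove(entry.path)
    return sorted(entry.name for entry in stale)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        for name in ("old.wav", "new.wav"):
            open(os.path.join(tmp, name), "wb").close()
        hour_ago = time.time() - 3600
        os.utime(os.path.join(tmp, "old.wav"), (hour_ago, hour_ago))
        print(purge_old_recordings(tmp, max_age_seconds=600))  # 10-minute window
```

Running such a purge on a timer (or after every prediction) bounds how long any raw audio exists on disk.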

ii. Generative Responses

The model is built on a Convolutional Neural Network (CNN), and no two responses it generates are exactly alike, resulting in new, yet familiar, feedback every time.
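The page does not describe how this variation is introduced. One common way to make repeated outputs differ while staying close to a prediction is to sample around it. The sketch below assumes, hypothetically, that the model outputs a mean intensity in [0, 1] for each motor and adds small Gaussian jitter; `sample_gesture` and the `spread` value are illustrative.

```python
import random


def sample_gesture(mean_intensities, spread=0.05, rng=None):
    """Draw one gesture by jittering each motor's predicted intensity with
    Gaussian noise, then clamping the result to the valid range [0, 1]."""
    if rng is None:
        rng = random.Random()
    return [min(1.0, max(0.0, rng.gauss(mu, spread))) for mu in mean_intensities]


if __name__ == "__main__":
    rng = random.Random(42)
    predicted = [0.2, 0.5, 0.8]  # hypothetical model output for three motors
    print(sample_gesture(predicted, rng=rng))
    print(sample_gesture(predicted, rng=rng))  # similar, but not identical
```

A small `spread` keeps each gesture recognisable ("familiar") while ensuring consecutive responses are not byte-for-byte repeats.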

iii. Fast Feedback

In line with the rapid pace of the app, the model can make predictions on the fly as new data is fed into it. With four convolutional blocks and a single Long Short-Term Memory (LSTM) block, it takes at most 3 seconds to generate each new set of responses.
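Making predictions "on the fly" typically means running the model on a sliding window of the most recent audio. A minimal sketch, assuming a fixed window length and hop size; the `StreamingWindow` class and both sizes are hypothetical (for example, a 3-second window at 16 kHz would be 48,000 samples).

```python
from collections import deque


class StreamingWindow:
    """Collects incoming audio samples and emits a fixed-length window every
    `hop` new samples, so a model only ever runs on the most recent audio."""

    def __init__(self, window_size, hop):
        self.window_size = window_size
        self.hop = hop
        self.buffer = deque(maxlen=window_size)  # oldest samples drop off automatically
        self.since_last = 0

    def push(self, chunk):
        """Add a chunk of samples; return the windows now ready for inference."""
        ready = []
        for sample in chunk:
            self.buffer.append(sample)
            self.since_last += 1
            if len(self.buffer) == self.window_size and self.since_last >= self.hop:
                ready.append(list(self.buffer))
                self.since_last = 0
        return ready


if __name__ == "__main__":
    stream = StreamingWindow(window_size=4, hop=2)
    for chunk in ([0, 1, 2], [3, 4, 5], [6, 7]):
        for window in stream.push(chunk):
            print(window)  # each window would be handed to the model here
```

The hop controls the trade-off: a smaller hop gives more frequent updates at the cost of more model invocations.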

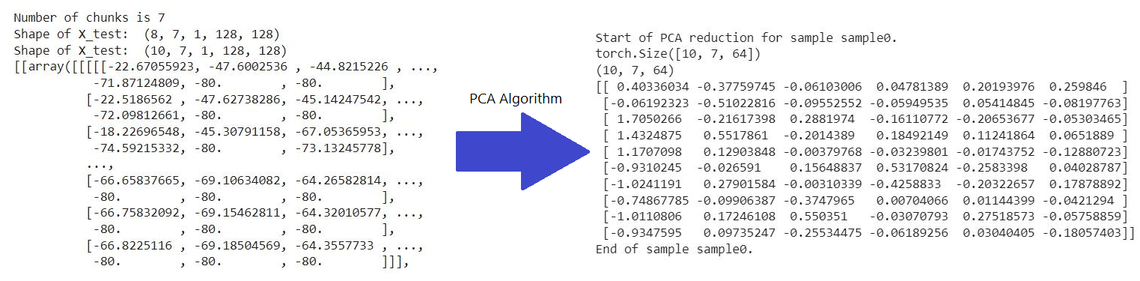

iv. Low resource consumption

After applying dimensionality reduction with t-SNE and PCA, the audio input is reduced from 128 dimensions to just 10 key features. The final model is just under 1 MB, and, including all preprocessing steps, its peak memory consumption never exceeded 150 MB during testing.
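As a rough sanity check on why a network like this can fit in about 1 MB, the arithmetic below counts float32 parameters for a hypothetical 4-conv-block + 1-LSTM network over 10 input features. All layer widths, the kernel size of 3 and the 8-motor output are assumptions for illustration, and extra layers such as batch normalisation are ignored.

```python
def conv1d_params(in_ch, out_ch, kernel):
    # One (in_ch x kernel) filter per output channel, plus one bias each.
    return out_ch * in_ch * kernel + out_ch


def lstm_params(input_size, hidden):
    # Four gates, each with input weights, recurrent weights and one bias
    # vector (Keras convention; PyTorch keeps two bias vectors per gate).
    return 4 * (hidden * (input_size + hidden) + hidden)


def dense_params(in_features, out_features):
    return in_features * out_features + out_features


# Hypothetical layer sizes: 10 reduced features feeding 4 conv blocks.
channels = [10, 32, 64, 64, 64]
total = sum(conv1d_params(a, b, 3) for a, b in zip(channels, channels[1:]))
total += lstm_params(64, 64)
total += dense_params(64, 8)   # 8 motor outputs (assumed)
size_bytes = total * 4         # 4 bytes per float32 weight

if __name__ == "__main__":
    print(total, size_bytes)
```

Under these assumptions the network has about 65 thousand parameters, roughly a quarter of a megabyte of float32 weights, which shows how reducing the input to 10 features keeps every layer small.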

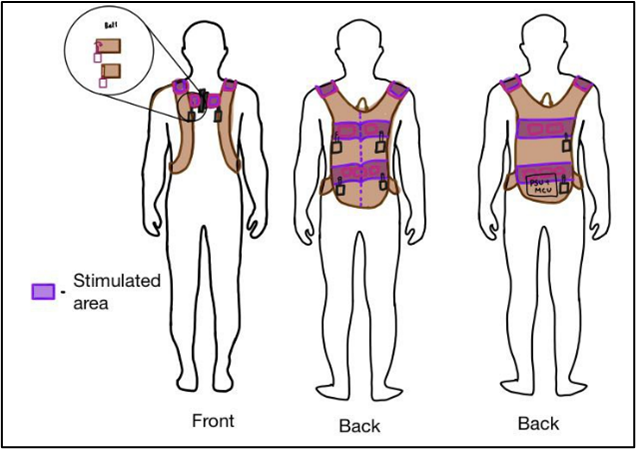

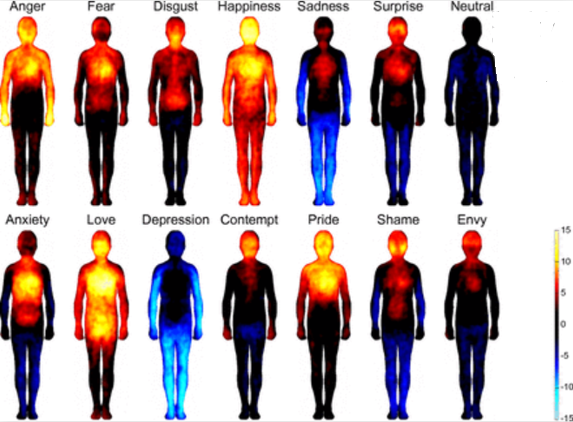

To best replicate human touch, we researched the phenomena behind it and found that different emotions tend to activate different areas of the body (first image on the right), and that these areas are largely concentrated in the upper half of the body.

We then conducted an experiment to verify these results. Using white shirts and paint, we identified which areas of the body were touched most frequently (second image on the right), and therefore where to place the motors in the TouchPack to stimulate these areas.
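Tallying the paint results can be sketched as a simple frequency count. Assuming each trial is recorded as the list of body regions marked with paint (the region labels, the example data and `rank_touch_regions` are hypothetical), the most frequently touched regions become candidate motor locations:

```python
from collections import Counter


def rank_touch_regions(trials, k):
    """Count, for each body region, the number of trials in which it was
    painted (duplicates within a trial count once), and return the k most
    frequently touched regions with their counts."""
    counts = Counter(region for trial in trials for region in set(trial))
    return counts.most_common(k)


if __name__ == "__main__":
    # Hypothetical example data: regions marked with paint in three trials.
    trials = [
        ["upper back", "shoulders"],
        ["upper back", "waist"],
        ["upper back", "shoulders", "upper back"],
    ]
    print(rank_touch_regions(trials, k=2))
```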

We went through many iterations to design the TouchPack, ensuring it was power-efficient, safe, ergonomic, and comfortable to wear.

After experimenting with other ideas such as a vest, a jacket, or even a plush toy (images 1-3), we settled on a backpack, as it proved the easiest to integrate with the circuitry while remaining hassle-free to wear.

In order to simulate human touch, we went through several possible actuation methods to provide the appropriate tightening and loosening forces.

These methods included magnetic actuation, pneumatic actuation, shape memory alloys (SMAs) and motors.

After finding motors to be the best compromise between required output, power efficiency, and safety, we experimented with various tightening mechanisms to see which would produce the force we needed consistently.

As the motors used in the TouchPack are small, to fit its compact design, we sewed a shoelace pattern into the TouchPack, both to take advantage of the pulley effect and to distribute force more evenly across the stimulated areas.
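To see why lacing helps small motors, consider an idealised model (an assumption, not a measured result): if the lace crosses the gap `n` times and every crossing carries the lace tension, the closing force is roughly `n` times the motor's pull. Friction at each eyelet reduces the tension passed along, which the capstan equation models as a factor of e^(-μθ). The function name, friction coefficient and wrap angle are hypothetical.

```python
import math


def lacing_closing_force(motor_tension_n, crossings, mu=0.0, wrap_angle_rad=math.pi / 2):
    """Approximate total force (N) pulling two laced panels together.

    Idealised assumptions: each lace segment crossing the gap is perpendicular
    to it and carries the local lace tension; at every eyelet the tension is
    multiplied by the capstan factor exp(-mu * wrap_angle_rad).
    """
    loss = math.exp(-mu * wrap_angle_rad)
    return sum(motor_tension_n * loss ** i for i in range(crossings))


if __name__ == "__main__":
    print(lacing_closing_force(5.0, 6))          # frictionless: 6 x 5 N
    print(lacing_closing_force(5.0, 6, mu=0.2))  # friction limits the gain
```

The model also shows the design trade-off: extra crossings multiply force and spread it over a larger area, but with friction each additional crossing contributes less than the one before.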

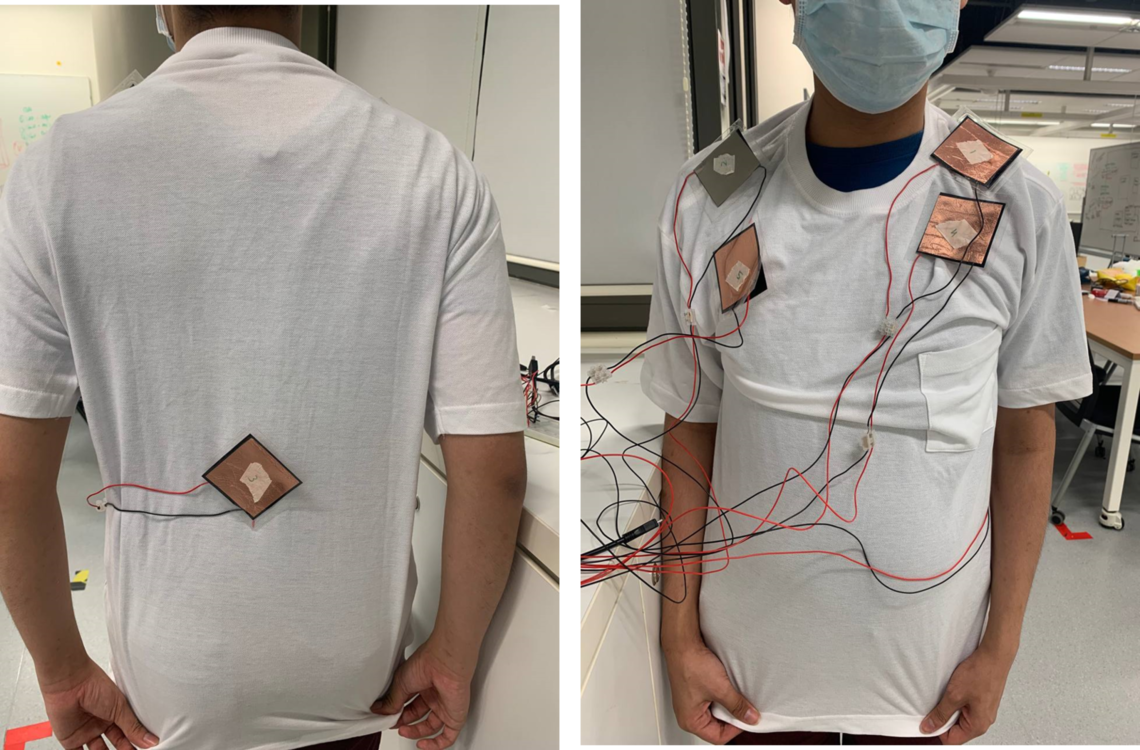

After determining the appropriate sensor placement, we constructed a sensor suit that resembled the TouchPack, but with sensors instead of motors (image 1).

Using this suit, we conducted trials in which participants wearing it expressed a particular emotion and were then given a physical response appropriate to that emotion (e.g., a hug for a participant expressing sadness).

By recording audio clips of participants expressing emotion, together with the resulting pressure values on the sensor suit, we obtained sufficient data to train our machine learning model.
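Pairing each audio clip with its pressure recording can be sketched as follows. The `Trial` structure and file names are hypothetical, and reducing each recording to the peak reading per sensor is an illustrative choice rather than the team's documented method.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Trial:
    clip_path: str                      # recording of the participant speaking
    pressure_frames: List[List[float]]  # one row per time step, one column per sensor


def pressure_target(frames):
    """Collapse a trial's pressure recording into one value per sensor
    (the peak reading, chosen here purely for illustration)."""
    return [max(column) for column in zip(*frames)]


def build_dataset(trials):
    """Pair every audio clip with its per-sensor pressure target."""
    return [(t.clip_path, pressure_target(t.pressure_frames)) for t in trials]


if __name__ == "__main__":
    trial = Trial("clip_001.wav", [[0.1, 0.0], [0.4, 0.2], [0.3, 0.9]])
    print(build_dataset([trial]))
```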

A live demo of the sensor suit is shown in the video on the left (if it does not load, click here).

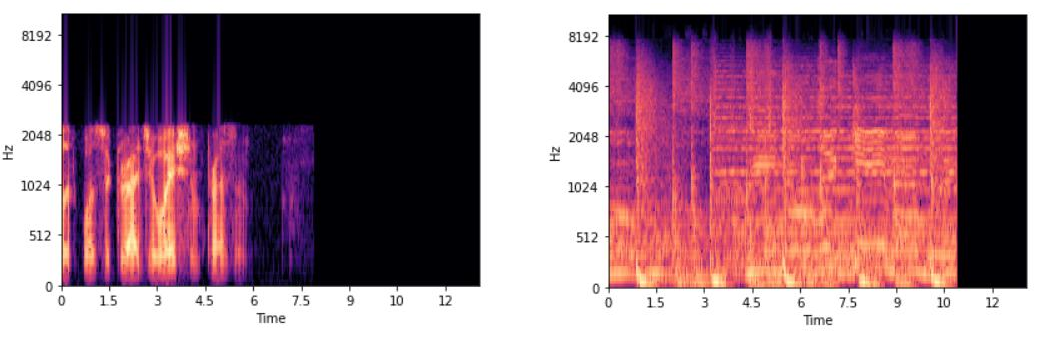

Finally, the datasets were used to train the CNN model. After suitable preprocessing of the clips, such as removing silence and white noise, Mel-Frequency Cepstral Coefficients (MFCCs) were extracted from the audio clips and used as input tensors for training the CNN. Samples of the extracted mel spectrograms are shown on the right (image 1).
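The silence-removal step and the mel scale behind MFCCs can be sketched in a few lines. This assumes simple frame-energy thresholding (the frame length and threshold are hypothetical tuning values) and uses the common HTK mel formula; a real pipeline would typically rely on a dedicated audio library for the full MFCC computation.

```python
import math


def remove_silence(samples, frame_len, threshold):
    """Split audio into frames and drop those whose RMS energy is below
    `threshold`; the remaining frames are kept in their original order."""
    kept = []
    for start in range(0, len(samples), frame_len):
        frame = samples[start:start + frame_len]
        rms = math.sqrt(sum(s * s for s in frame) / len(frame))
        if rms >= threshold:
            kept.extend(frame)
    return kept


def hz_to_mel(f_hz):
    """HTK-style mel scale, commonly used to space MFCC filterbank bands."""
    return 2595.0 * math.log10(1.0 + f_hz / 700.0)


if __name__ == "__main__":
    audio = [0.0] * 4 + [0.5, -0.5, 0.5, -0.5] + [0.001] * 4
    print(remove_silence(audio, frame_len=4, threshold=0.01))
    print(hz_to_mel(1000.0))  # close to 1000 mel by construction
```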

However, because using every extracted MFCC feature would have produced an overly complex model with long training times, we needed to identify which components of speech were useful for emotion recognition. Using the t-SNE and PCA algorithms, we reduced the MFCC features to just 10 dimensions (image 2) that were crucial for emotion recognition.
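A minimal sketch of PCA via power iteration with deflation, written in pure Python so it is self-contained. It covers PCA only: standard t-SNE is mainly a visualisation technique and does not learn a mapping that can be applied to new inputs, so how the team combined the two is not reproduced here. The function name, iteration count and seed are assumptions.

```python
import math
import random


def pca(data, n_components, iters=200, seed=0):
    """Project the rows of `data` onto their top `n_components` principal
    components, found by power iteration with deflation on the covariance
    matrix. Returns (projected_rows, components)."""
    n, d = len(data), len(data[0])
    means = [sum(row[j] for row in data) / n for j in range(d)]
    centred = [[row[j] - means[j] for j in range(d)] for row in data]
    cov = [[sum(r[i] * r[j] for r in centred) / (n - 1) for j in range(d)]
           for i in range(d)]

    def matvec(m, v):
        return [sum(m[i][j] * v[j] for j in range(d)) for i in range(d)]

    def normalise(v):
        norm = math.sqrt(sum(x * x for x in v))
        return [x / norm for x in v] if norm > 0 else v

    rng = random.Random(seed)
    components = []
    for _ in range(n_components):
        v = normalise([rng.gauss(0.0, 1.0) for _ in range(d)])
        for _ in range(iters):
            v = normalise(matvec(cov, v))  # converges to the dominant eigenvector
        eigval = sum(a * b for a, b in zip(v, matvec(cov, v)))
        components.append(v)
        # Deflation: remove this direction so the next pass finds the next one.
        cov = [[cov[i][j] - eigval * v[i] * v[j] for j in range(d)]
               for i in range(d)]

    projected = [[sum(r[j] * c[j] for j in range(d)) for c in components]
                 for r in centred]
    return projected, components


if __name__ == "__main__":
    points = [[float(t), float(t), 0.1 if t % 2 else -0.1] for t in range(-5, 6)]
    reduced, comps = pca(points, n_components=2)
    print(comps[0])  # close to +/-(0.707, 0.707, 0.0)
```

For 128 MFCC-derived features the same routine would keep the 10 highest-variance directions; a numerical library would be the practical choice at that size.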

Jeremy Surjoputro

Engineering Product Development

Maddula Akshaya

Engineering Product Development

Akshaya Rajesh

Engineering Product Development

Wong Kai-En Matthew Ryan

Information Systems Technology and Design

Ang Sok Teng Cassie

Information Systems Technology and Design

© 2022 SUTD